It was the first week of the new year and, in the spirit of resolutions and new beginnings, I wrote down all the goals I could think of. After some refining, one of my goals was to take an online class. I had a few classes in mind, and settled on the one with the earliest start date. Now that my class has come to a close, I want to share my tips on surviving an online class and getting the most out of it.

Iconic pencils that you won’t be needing.

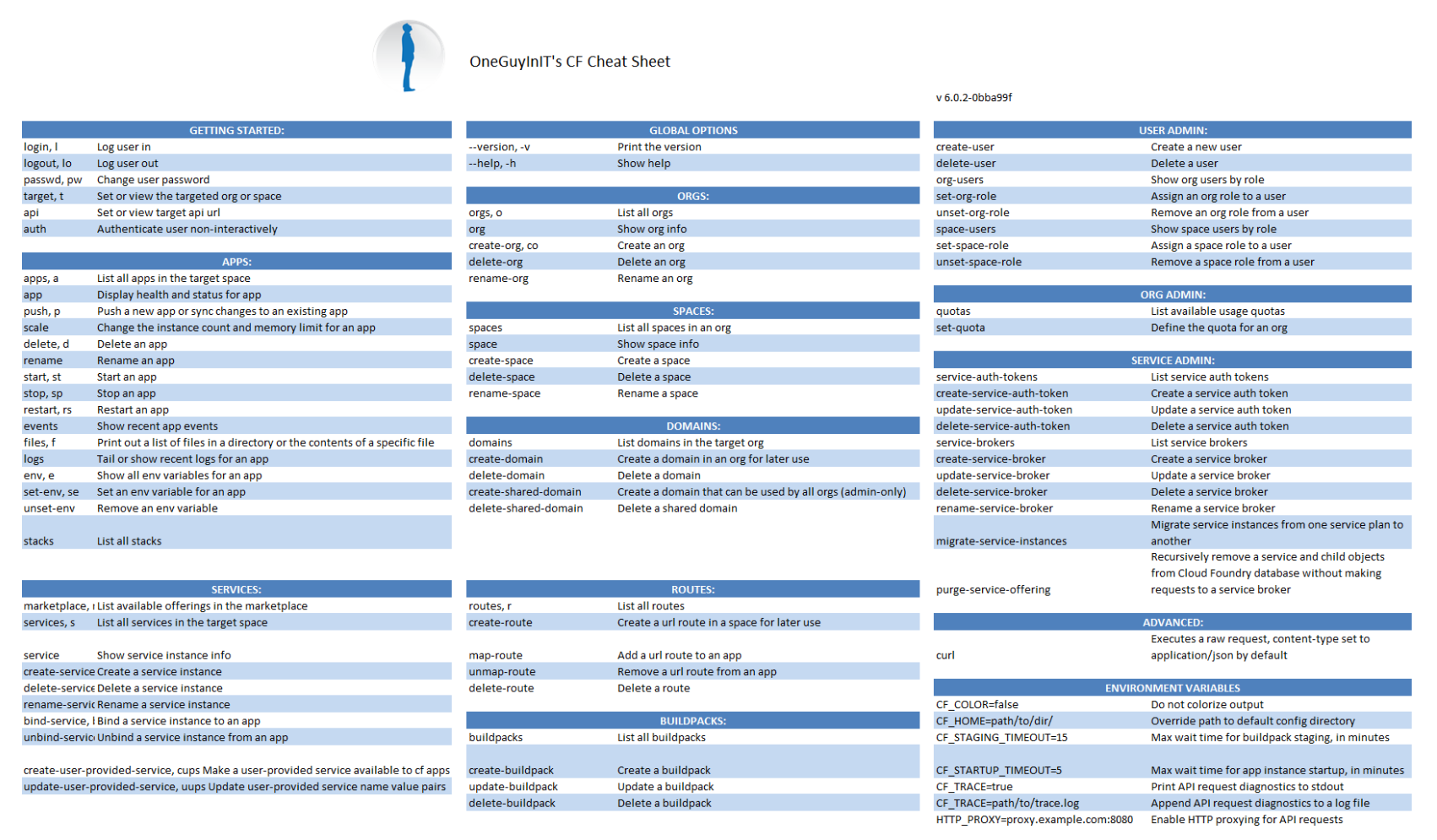

The class I settled on was called ‘Computing for Data Analysis’, offered via Coursera.org. This class was on my short list because it applied to both my professional life and my personal projects. It teaches you the basics of the statistical programming language ‘R’. For those of you not familiar with R, it is basically a command line interface for spreadsheets that makes it ‘easy’ to generate graphical representations of your large data sets. My definition is a simplified one that doesn’t do R justice, but for the layperson, I think it does the job.

Professionally, I wanted to be able to have conversations with data scientists. Personally, I wanted to manipulate and derive value from VC/Angel and startup data, and to do so in a repeatable fashion. I wanted to be able to take data collected via APIs, Excel spreadsheets, and websites and manipulate it in order to answer questions. Basically, I have multiple pools of data that I needed to sift through to answer some very specific questions for myself. If you want more details, Jamie Davidson wrote a really cool article that tries to answer “How much venture money should a startup raise to be successful?” He even published his R code so you can reproduce his research. Cool stuff!

The point being, you need some motivation to get through this (or any) class. If you don’t care about the content being taught, then you aren’t very likely to complete the class.

I was fortunate enough to have selected a class that was offering a “Signature Track”, which basically means that the school administering the class, Johns Hopkins, acknowledges your work in the class and you can add a line item to your LinkedIn page. This wasn’t a motivator for me, but since I had already resolved to complete the class, spending the $50 to earn the certificate was a no-brainer.

So the class is set on a weekly schedule that goes something like this:

- Weekly video content published.

- Weekly quiz available.

- Weekly programming assignment available.

- Repeat.

Candidly, I’m not a programmer, a developer, or a data scientist. I was definitely in over my head, and very thankful to have taken the Python course offered by Codecademy prior to this course, so I knew a little about syntax and how to ‘talk to a computer’. The estimate of 3-5 hours per week was very low given my learning curve. I needed to get up to speed, and fast.

Without a doubt, the most difficult part of programming is knowing how to ask your question. Too many questions are asked with hidden assumptions and misaligned expectations. For this reason, the class forum can be a very noisy place and, once you know how to ask your question correctly, it is often not the best resource for getting your answer. That’s why official documentation and established forums are my go-to resources for specific questions. The class forum is still great because it is moderated by class TAs who can help answer theoretical questions or help you rethink your problem to get you to the right question. Lastly, if and only if you know what you don’t know, you can leverage the IRC chat rooms.

So if you have the desire and the right resources available to you, I think you’ll be fine. The last thing I want to leave you with is networking. If I went to grad school, a big reason would be for the network. I want to meet people with a passion for success who are on their way up. The same holds true for online courses. I took it upon myself to start the ‘Computing for Data Science – Class of 1/2014’ group on LinkedIn. If your class is small (under ~50 students), you could just share your LinkedIn profiles, but trying to connect with 70k other students is a big data problem in and of itself… So if you are taking a course, set up a place for your classmates to network with each other after the class is over.

I would recommend ‘Computing for Data Analysis’ to anyone interested in data manipulation, data science, or big data. It offers a Signature Track and also gives you credit toward Coursera’s ‘Data Science Specialization,’ which could be a differentiator on a resume.